16 April 2025, by Till Möller

The Speaking Machine: Design and Implementation of a REST Chat Bot

In this blog post, I would like to present the technical details of the GenAI chatbot integration ‘talking machine’ in the com2m smart product platform. This post is the second part of a series; the first part introduced the topic of the ‘talking machine’.

The ‘talking machine’ use case is a GenAI showcase. Integrated into the com2m smart product platform, it is intended to give users a helpful AI assistant whose tasks cover the following areas: querying data such as assets, data points or messages; interpreting data; and supporting troubleshooting. At the moment, the focus is on correct and efficient data retrieval; interpretation and assistance will follow later.

Architecture

The chatbot is an experimental extension of the com2m smart product platform and must be integrated into the existing architecture. This is done by a new, dedicated Spring Boot service based on Spring AI, referred to as the chat service. It acts as the link between the user, the other platform services and the GenAI model (see Fig. 1).

Figure 1: Integration of the chat service into the existing architecture

The chat service receives and processes user requests via a REST endpoint. To answer them, it communicates with a GPT-4o model hosted in Azure OpenAI. To enrich the requests with data, it queries the other platform services, also via REST, and retrieves only the data needed for the current request.
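A minimal sketch of such a data query follows. It uses only the JDK's `java.net.http` classes rather than the Spring stack of the actual service, and everything in it is an assumption made for illustration: the class `PlatformClient`, the base URL, the `/assets` path, the `name` query parameter and the forwarding of the user's bearer token. It shows two properties described in this post: each query is narrowly scoped to the data a question needs, and it carries the calling user's credentials so that the platform can apply that user's rights.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

// Hypothetical client for one platform service; URL layout and auth scheme are assumptions.
public class PlatformClient {
    private final URI baseUri;

    public PlatformClient(URI baseUri) {
        this.baseUri = baseUri;
    }

    // Builds a narrowly scoped GET request: only assets matching the requested name,
    // authorised with the calling user's own token so the platform applies that
    // user's permissions rather than those of a technical service account.
    public HttpRequest assetsByName(String assetName, String userToken) {
        String query = "name=" + URLEncoder.encode(assetName, StandardCharsets.UTF_8);
        URI uri = baseUri.resolve("/assets?" + query);
        return HttpRequest.newBuilder(uri)
                .header("Authorization", "Bearer " + userToken)
                .header("Accept", "application/json")
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        PlatformClient client = new PlatformClient(URI.create("https://platform.example.com"));
        HttpRequest request = client.assetsByName("Pump 7", "user-token");
        // Prints: GET https://platform.example.com/assets?name=Pump+7
        System.out.println(request.method() + " " + request.uri());
        // Sending it would use java.net.http.HttpClient#send(request, HttpResponse.BodyHandlers.ofString()).
    }
}
```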

Figure 2: Two-step processing model (classification and response)

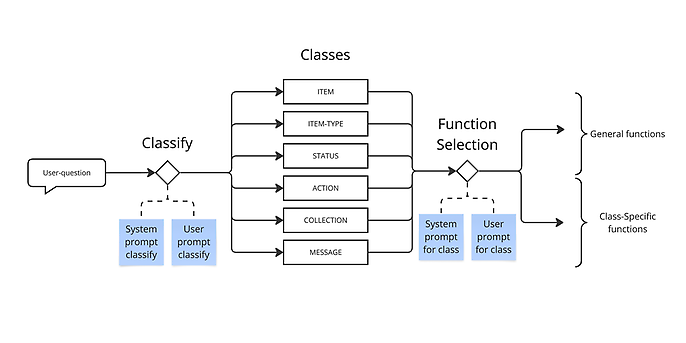

Specifically, the chat service follows a two-step process similar to the agent paradigm:

1. Categorisation: In the first step, the AI model classifies the request into a category (see Fig. 2). The category determines which data retrieval functions are available in the next step.

2. Processing: In the second step, the request is sent to the AI model together with the context and the functions selected for its category. The model chooses the appropriate function and returns this function call to the chat service; this paradigm is known as function calling or tool calling. The chat service executes the selected function, requesting the required data with the user's rights, and passes the data back to the AI model, which formulates the answer. More complex requests can be handled through repeated calls or calls to several different functions.
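The two steps can be sketched in plain Java. This is an illustrative control flow under stated assumptions, not the chat service's code: `Model` is a hypothetical stand-in for GPT-4o, and the category names, the function names `findAssets` and `findMessages`, and the limit `MAX_TOOL_CALLS` are invented. The loop is spelled out explicitly, and the model is scripted in `main`, so that the example runs without a framework or a model connection.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class TwoStepChat {

    // A function call requested by the model (simplified to one string argument).
    record ToolCall(String name, String argument) {}

    // The model's reply in step 2: either a function call or a final answer.
    record ModelReply(ToolCall toolCall, String answer) {
        static ModelReply ofCall(String name, String argument) {
            return new ModelReply(new ToolCall(name, argument), null);
        }
        static ModelReply ofAnswer(String text) {
            return new ModelReply(null, text);
        }
    }

    // Hypothetical stand-in for the LLM (GPT-4o in the showcase).
    interface Model {
        String classify(String question, List<String> categories);
        ModelReply respond(List<String> messages, List<String> availableFunctions);
    }

    // Hypothetical categories, each unlocking a subset of data-retrieval functions.
    static final Map<String, List<String>> FUNCTIONS_BY_CATEGORY = Map.of(
            "assets", List.of("findAssets"),
            "messages", List.of("findAssets", "findMessages"));

    // Upper bound on function calls per question, so a confused model cannot loop forever.
    static final int MAX_TOOL_CALLS = 5;

    static String handle(String question, Model model, Map<String, Function<String, String>> functions) {
        // Step 1: classification determines which functions are offered to the model.
        String category = model.classify(question, List.copyOf(FUNCTIONS_BY_CATEGORY.keySet()));
        List<String> allowed = FUNCTIONS_BY_CATEGORY.getOrDefault(category, List.of());

        // Step 2: in each round the model either requests a function call or answers.
        List<String> messages = new ArrayList<>();
        messages.add("user: " + question);
        for (int i = 0; i < MAX_TOOL_CALLS; i++) {
            ModelReply reply = model.respond(messages, allowed);
            if (reply.toolCall() == null) {
                return reply.answer();
            }
            ToolCall call = reply.toolCall();
            Function<String, String> function = functions.get(call.name());
            if (function == null || !allowed.contains(call.name())) {
                // Report the invalid call back to the model instead of executing it.
                messages.add("tool error: " + call.name() + " is not available");
                continue;
            }
            // Execute the function (in the chat service: a REST query with the user's rights)
            // and hand the result back to the model for the next round.
            messages.add("tool " + call.name() + ": " + function.apply(call.argument()));
        }
        return "The question could not be answered within the call limit.";
    }

    public static void main(String[] args) {
        // Scripted model: classifies as "assets", requests one lookup, then answers.
        Model scripted = new Model() {
            public String classify(String question, List<String> categories) {
                return "assets";
            }
            public ModelReply respond(List<String> messages, List<String> availableFunctions) {
                String last = messages.get(messages.size() - 1);
                if (last.startsWith("tool findAssets: ")) {
                    return ModelReply.ofAnswer("Found: " + last.substring("tool findAssets: ".length()));
                }
                return ModelReply.ofCall("findAssets", "Pump");
            }
        };
        Map<String, Function<String, String>> functions =
                Map.of("findAssets", name -> "[" + name + " 7, " + name + " 8]");
        // Prints: Found: [Pump 7, Pump 8]
        System.out.println(handle("Which pumps do I have?", scripted, functions));
    }
}
```

In this sketch, restricting the offered functions per category means the model only sees the functions relevant to the classified request, and the call limit bounds how many round trips a single question can trigger.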

The described process is shown schematically in Fig. 3.

Figure 3: Processing of a user question

Context

Large language foundation models such as GPT-4o have a broad understanding of language and of the information they were trained on. However, they have no specific knowledge of the use case in which they are deployed. For the model to receive this information, the context must be set correctly. The context, including prompt engineering, is therefore the most important factor in making a large language model work correctly in a specific application environment.

We set the context in several places in the chatbot application. In this showcase, the context is made up of four building blocks: chat history, system messages, user messages and function descriptions.

For the chat history, the most recent messages are simply added to the context. The function descriptions are provided in a JSON format that contains the description of the function, its individual parameters and which of those parameters are mandatory. The system messages play the most important role, as they carry most of the use-case-specific information. Alongside the user messages, the showcase uses several types of system messages:

- Customer-specific information and rules: These system messages can, for example, define the unit to be used for a specific data point.

- Classification and categories: These system messages follow the pattern ‘purpose (what task should roughly be fulfilled)’, ‘specialisation (how the model is specialised for the task)’, ‘available data (what information should be used)’ and ‘processing (how a request should be processed)’. The ‘available data’ part is enriched dynamically at runtime, for example with the current time.

- User messages: These contain the user's actual question as well as an instruction on what to do with it (answer or classify).
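The assembly of these building blocks can be sketched as follows. The class and method names, the message texts, the history size and the function `findDataPoints` are hypothetical. The JSON layout of the function description follows the common OpenAI function-calling shape (`name`, `description`, and a `parameters` object with `properties` and `required`), which is an assumption about the showcase's exact format; the JSON is built by hand only to keep the example free of dependencies.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

public class ContextBuilder {

    // One chat message; role is "system", "user" or "assistant".
    record Message(String role, String content) {}

    // One parameter of a data-retrieval function.
    record Param(String name, String type, String description, boolean required) {}

    // Description of a callable function, rendered as OpenAI-style function-calling JSON.
    record FunctionDescription(String name, String description, List<Param> params) {
        String toJson() {
            String properties = params.stream()
                    .map(p -> quote(p.name()) + ":{\"type\":" + quote(p.type())
                            + ",\"description\":" + quote(p.description()) + "}")
                    .collect(Collectors.joining(","));
            // Mandatory parameters are listed separately under "required".
            String required = params.stream()
                    .filter(Param::required)
                    .map(p -> quote(p.name()))
                    .collect(Collectors.joining(","));
            return "{\"name\":" + quote(name) + ",\"description\":" + quote(description)
                    + ",\"parameters\":{\"type\":\"object\",\"properties\":{" + properties
                    + "},\"required\":[" + required + "]}}";
        }
    }

    // Minimal JSON string escaping; a real service would use a JSON library instead.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n") + "\"";
    }

    // Category system message following the four-part pattern; the
    // "available data" part is enriched at runtime with the current time.
    static String categoryMessage(String purpose, String specialisation,
                                  String availableData, String processing, Instant now) {
        return "Purpose: " + purpose + "\n"
                + "Specialisation: " + specialisation + "\n"
                + "Available data: " + availableData + " Current time: " + now + "\n"
                + "Processing: " + processing;
    }

    // Assembles the messages sent to the model. Function descriptions are passed
    // separately, as the tool definitions of the request.
    static List<Message> buildContext(String customerRules, String categoryMessage,
                                      List<Message> history, int maxHistory,
                                      String instruction, String question) {
        List<Message> context = new ArrayList<>();
        context.add(new Message("system", customerRules));
        context.add(new Message("system", categoryMessage));
        // Chat history: only the most recent messages are added.
        int from = Math.max(0, history.size() - maxHistory);
        context.addAll(history.subList(from, history.size()));
        // User message: instruction (answer or classify) plus the actual question.
        context.add(new Message("user", instruction + "\nQuestion: " + question));
        return context;
    }

    public static void main(String[] args) {
        FunctionDescription findDataPoints = new FunctionDescription("findDataPoints",
                "Returns the data points of an asset.",
                List.of(new Param("assetId", "string", "ID of the asset", true),
                        new Param("name", "string", "Optional filter on the data point name", false)));
        System.out.println(findDataPoints.toJson());

        String category = categoryMessage(
                "Answer questions about data points.",
                "You are an assistant for an IoT platform.",
                "Data points returned by the available functions.",
                "Call a function before answering; never invent values.",
                Instant.now());
        List<Message> history = List.of(
                new Message("user", "Which assets do I have?"),
                new Message("assistant", "You have Pump 7 and Pump 8."));
        List<Message> context = buildContext(
                "Always report temperatures in degrees Celsius.", category,
                history, 10, "Answer the following question.", "What is the temperature of Pump 7?");
        context.forEach(m -> System.out.println(m.role() + ": " + m.content()));
    }
}
```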

Careful design and integration of these elements give the model the information it needs to provide precise, context-sensitive answers.

Conclusion and outlook

The showcase was implemented quickly thanks to frameworks such as Spring AI. The biggest challenge, however, remains providing the model with the correct context: even changing individual words can significantly affect the overall result. Moreover, changes can only be tested to a limited extent, because LLM outputs are not deterministic. Improving the context is therefore an ongoing process in which eliminating all errors is almost impossible. Nevertheless, our goal is to make the chatbot as robust and reliable as possible. To achieve this, we rely on its use in customer projects to collect feedback and feed it into the improvement process. In addition, new extensions are being developed that will, for example, make it possible to upload documents such as manuals or other documentation and to generate answers and recommendations for action based on these documents.